Configuring for Performance and Resiliency

A Synergy application’s performance and resiliency can often be significantly improved by careful system design and configuration. This topic doesn’t provide details for any particular system, but it does provide general guidelines for improving performance and achieving high availability (resiliency). It contains the following sections:

- General performance recommendations

- Configuring for resiliency

- Configuring virtual machines

- Design and configuration for client/server systems

- Optimizing network data access with xfServer

- Configuring SAN for optimal data access

- Food for thought: Results from our testing

Issues with third-party products and their interactions are beyond the scope of this documentation and Synergy/DE Developer Support. A common example of this is operating system virtualization, where Synergy/DE is supported on the operating system, and the virtualization software acts as a hardware layer. We recommend that you maintain support contracts with third-party product vendors (e.g., operating system and virtualization software vendors) for assistance with these issues.

Systems for Synergy applications can be configured in a number of ways: stand-alone (monolithic) systems, virtualized systems, and client/server configurations with data and/or processing on a server. A correctly configured stand-alone system with sufficient resources provides the best performance for Synergy applications. Such systems are very fast and adding a hot standby makes them resilient. (See Configuring for resiliency.) We recommend that Synergy applications use a stand-alone system when possible. If you find that your applications are pushing the system’s limits (too many users, intensive end-of-year processing, etc.), improve the stand-alone system, if possible, before considering moving data or processing out to the network.

General performance recommendations

For a Synergy application, optimization involves carefully configuring the hardware and software that support the application, and carefully designing and coding the Synergy application itself.

Keep the following in mind when configuring your system for Synergy applications:

- Make sure SAN drives are configured optimally (see Configuring SAN for optimal data access).

- Keep software current. Using old versions of Synergy/DE with current third-party tools (or vice versa) can result in poor performance, if the combination works at all.

- Always configure antivirus software to exclude scanning of .is1, .ism, and .ddf files, as well as any data files you have with custom extensions.

CPU performance and sizing

- Modern processors adjust the CPU speed automatically to save power. Make sure the CPU is clocking up for your Synergy application by setting the BIOS to allow the operating system to control power management. Some BIOS power management configurations favor power saving over performance, so if the core for a Synergy application is the only heavily utilized core at any given point, the BIOS may lower the CPU’s clock speed, severely hindering performance.

- All traditional Synergy programs, including xfServerPlus server processes, are single-threaded, while Synergy .NET programs can be coded to take advantage of multiple threads. The isutl utility can take advantage of multiple cores, one for each key of the file, up to eight. Pay careful attention to CPU speed when upgrading and/or moving systems. When using systems with Intel Hyper-Threading, a quad-core system may look like an octa-core system, but it is hard to drive all eight logical cores to 100% without throttling the speed boost, which lowers the maximum core speed. In many cases, disabling Hyper-Threading results in better performance (subject to application load testing).

- Individual users of a multi-user application, a batch job, or a cron job can each use one of the available cores of a multi-core processor.

- A 4.2 GHz CPU with SpeedStep to 5 GHz and four cores can run a single traditional Synergy program three times as fast as a 1.7 GHz 12-core system with no SpeedStep. However, the 12-core system may perform slightly better in multi-user scenarios.

- Moving a physical machine to a virtual server always results in some degree of performance loss related to CPU power, especially with heavy network traffic like xfServer.

- Running isutl -r to recover a large file with two keys and large compressed records will take longer on lower-speed systems regardless of the number of cores.
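The comparison in the list above between the 4.2 GHz four-core CPU and the 1.7 GHz 12-core CPU can be checked with simple arithmetic. The following Python sketch assumes that throughput scales linearly with clock speed and core count, a simplification that ignores cache, memory, and per-clock efficiency differences. The function names are illustrative only.

```python
# Back-of-the-envelope comparison of the two CPUs described above.
# Assumption (not from the guidelines): throughput scales linearly with
# clock speed and with the number of busy cores.

def single_thread_ratio(fast_ghz: float, slow_ghz: float) -> float:
    """Relative speed of one single-threaded program on two CPUs."""
    return fast_ghz / slow_ghz


def aggregate_ghz(cores: int, ghz_per_core: float) -> float:
    """Total clock capacity when every core is busy."""
    return cores * ghz_per_core


# One traditional Synergy program: boosted quad-core vs. the 12-core system.
ratio = single_thread_ratio(5.0, 1.7)

# Many users at once: all cores busy, so the quad-core runs at base clock.
quad_total = aggregate_ghz(4, 4.2)
twelve_total = aggregate_ghz(12, 1.7)

print(f"single-thread ratio: {ratio:.2f}x")
print(f"aggregate capacity: {quad_total:.1f} GHz vs {twelve_total:.1f} GHz")
```

Under these assumptions the single-program ratio is a little under three, and the 12-core system has only modestly more aggregate capacity, which is consistent with the "three times as fast" and "slightly better in multi-user scenarios" statements.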

Application design

A Synergy application’s design and the way it is coded can make a big difference in the way it performs. Make sure you use established best practices for programming and note the following recommendations:

- Use OPEN and CLOSE as little as possible. For example, do not open or close channels in a loop, and wait until a channel is no longer needed in the current context before closing it. Data in the system cache is written to disk when a channel that is open for update or output is released for a CLOSE, so unnecessary opening and closing of channels wastes disk subsystem bandwidth and can severely hinder performance for large workloads. (On Windows and UNIX, you may want to set system option #44, which disables flushing on a CLOSE.)

- With Synergy .NET, make sure that objects created in a loop are destroyed at the end of the loop. This includes Synergy alpha, integer, and decimal data types, which are implemented as objects. For example, if a loop uses arithmetic on a decimal type, a decimal object will be created for the results with every iteration of the loop, and .NET garbage collection may then consume a large percentage of CPU resources disposing of the leftover objects.

- For sequential READS, use LOCK:Q_NO_TLOCK for files opened in update mode when possible. This reduces disk overhead and unnecessary locking and allows caching of index blocks. But note that cached index blocks for the READS will not include records subsequently stored by other users.

- Use the OPTIONS:"/sequential" qualifier for OPEN to optimize sequential record access when using a sequential file or primary key access on an ISAM file ordered with the isutl -ro option. This improves the effectiveness of operating system caching for sequential reads.

- Use the Q_EQ option for the MATCH qualifier to prevent record locking when a record is not found and to prevent the next record from being read and acquiring a lock.

- Use I/O error lists for common errors such as end of file, key not same, and record locked. I/O error lists are much faster than TRY/CATCH, especially over a network with xfServer, and they are built into READS, WRITES, and other Synergy DBL statements that have an error list argument.

- When using IOHooks, avoid performing network operations in your hook routines, such as posting a transaction to a SQL database.

- Use profiling tools to evaluate your applications’ performance and to uncover coding and design issues that could affect performance, particularly for processor-intensive applications such as programs for end-of-year processing. For Synergy .NET applications, use the Visual Studio CPU Usage tool (with managed and unmanaged code) and the Visual Studio Memory Usage tool. For traditional Synergy applications, use the Synergy DBL Profiler.

Data files

Optimally configuring and using data files can also affect performance. Note the following:

- Don’t apply disk quotas to users on a disk that has Synergy data files.

- All ISAM files should be REV 6, which is aligned to 4K for large sector drives (i.e., index blocks are read and written on 4K boundaries). This means that indexes can be shallower, which reduces the overhead required to read records randomly. Unless you have SSD drives, limit indexes to no more than three levels deep. A smaller index means less disk movement for non-SSD disks. You may be able to further improve ISAM performance (especially for large files) by increasing page size from 4K to 16K.

- Use data compression and 100% packing density for ISAM files that change very little (e.g., archive files). For other ISAM files, we recommend you run isutl -vc, which gives you an idea of the expected compression savings for a file.

- Use isutl to maintain ISAM files. ISAM file indexes and data should be periodically optimized:

  - Use isutl -v to verify files.

  - Use isutl -vi for the information advisor, which provides details about a file’s condition and offers advice (including performance enhancements) based on file organization and content.

  - Use isutl -r to re-index the file or isutl -ro to re-index the file and reorder the data.

- If you use snapshots, use the synbackup utility to prevent file corruption and, for performance reasons, consider limiting snapshots. (For performance troubleshooting, try turning off snapshots to see if that makes a difference.)
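To see why a larger page size can make an index shallower, as the list above suggests, consider a simplified model in which every index page is full and holds fixed-size entries. This Python sketch is not the actual Synergy ISAM layout: the 24-byte entry size and the 10-million-record file are hypothetical values chosen for illustration, and real files are also affected by packing density and key compression.

```python
def index_levels(records: int, page_bytes: int, entry_bytes: int) -> int:
    """Levels needed by a simplified tree index whose pages are all full.

    Each page holds page_bytes // entry_bytes entries (the fan-out), so
    each additional level multiplies the number of reachable records.
    """
    fanout = page_bytes // entry_bytes
    if fanout < 2:
        raise ValueError("page must hold at least two index entries")
    levels = 1
    capacity = fanout
    while capacity < records:
        capacity *= fanout
        levels += 1
    return levels


RECORDS = 10_000_000   # hypothetical file size
ENTRY_BYTES = 24       # hypothetical key plus pointer size

for page in (4096, 16384):
    depth = index_levels(RECORDS, page, ENTRY_BYTES)
    print(f"{page // 1024}K pages: {depth} index levels")
```

With these hypothetical numbers, moving from 4K to 16K pages reduces the index from four levels to three, which is the kind of change that matters most on non-SSD disks.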

Configuring for resiliency

The key to resiliency is redundancy. Regardless of your company’s configuration model—stand-alone, virtual, client/server—your servers should have built-in redundancy, such as dual power supplies and RAID 1 disks. Data center monitoring software (such as OpenManage Server Administrator from Dell) can alert you to the failure of any hardware component in the system so that it can be replaced before the alternate fails.

The four figures below show configurations at various levels of redundancy and therefore resiliency.

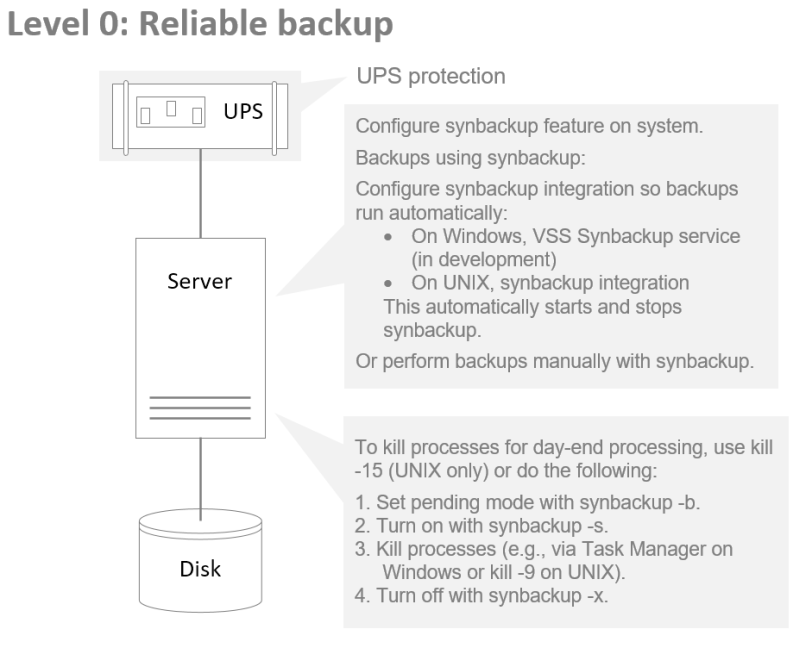

Level 0 shows a basic system that is backed up regularly using the synbackup utility. The system also has a UPS (uninterruptible power supply).


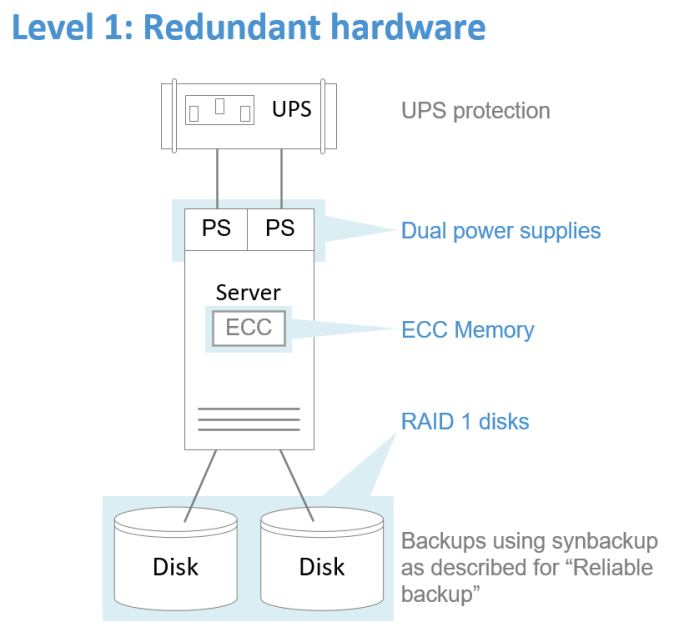

Level 1 also has the UPS, but has added dual power supplies and RAID 1 disks for use by synbackup.


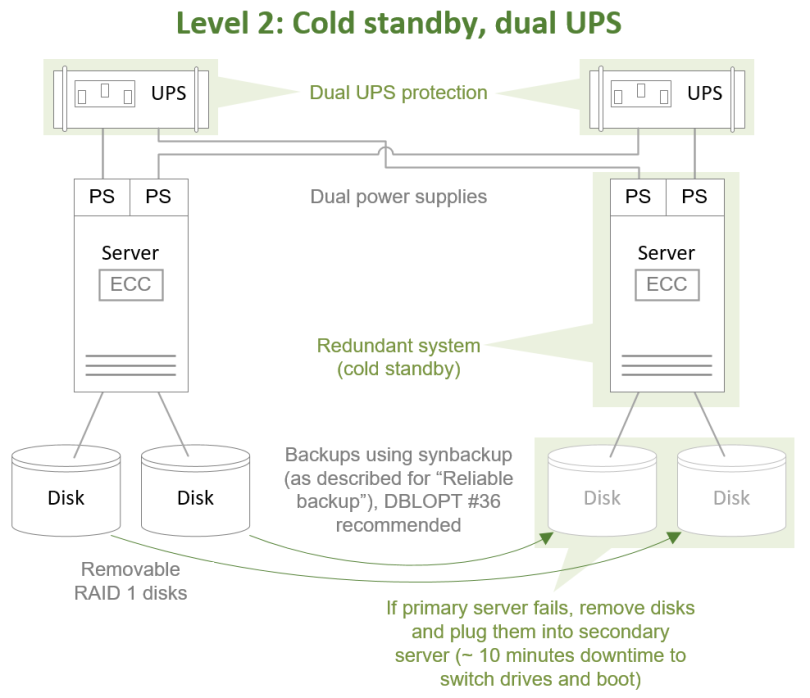

Level 2 includes a cold standby system. (A cold standby is a duplicate machine that can be ready to go once the disks are moved over and it’s powered on.) Both machines have dual power supplies. The main server is configured to make backups using synbackup to removable RAID 1 disks. If the main server fails, the disks can be removed and plugged into the cold standby machine.


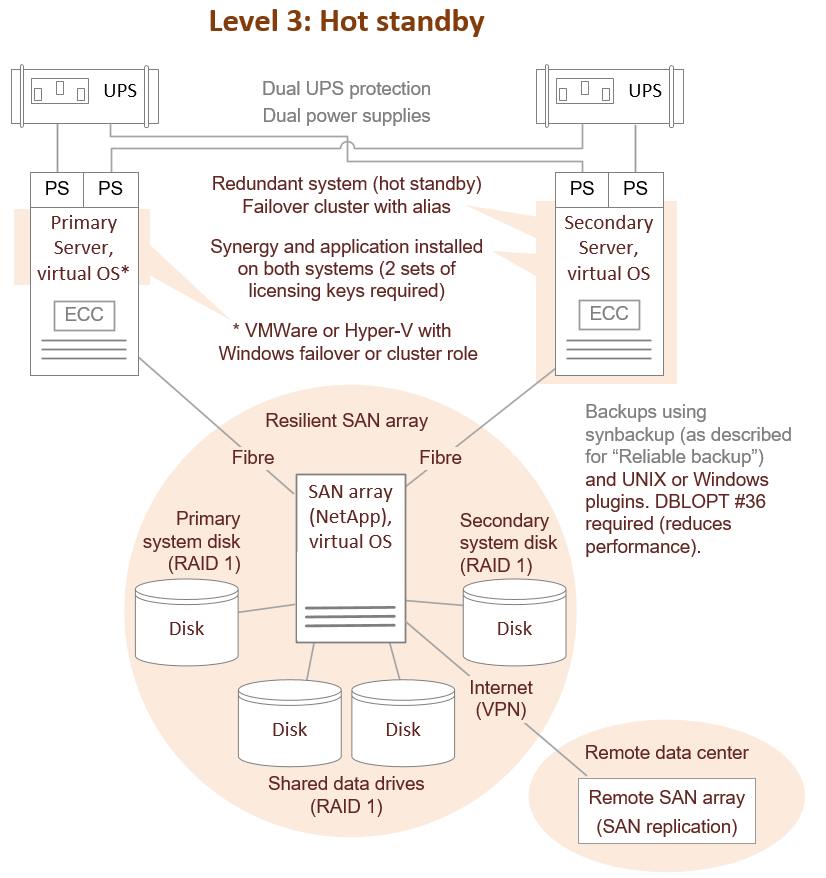

Level 3 shows a system with a hot standby. (A hot standby is a duplicate machine with a shared disk that is ready to go should the primary fail.) The main server is attached via fibre channel to a SAN array with SSD drives. (15,000 RPM SAS drives could also be used.) There should be dual paths from the server to the SAN, as well as dual controllers, battery backup, and RAID 1 drives. The hot standby system is powered up and attached to the same SAN array so that if the main server crashes, the hot standby can take over and the Synergy application can be back up and running within minutes.


When using a hot standby system, you should set system option #36 to ensure write/store/delete operations are flushed to the SAN array. (Alternatively, you can selectively use the FLUSH statement.) Flushing writes the operating system cache to disk. This prevents data corruption when the hot standby is brought into service, because the contents of the operating system cache are lost when a hot standby system starts up. Flushing protects data integrity, but it can hurt write/store performance because operating system caching is effectively bypassed. If you use option #36 system-wide, consider disabling it with %OPTION for programs that write or update large amounts of data, such as day-end processing.

Backups are generally performed using software on the SAN array, rather than the operating system, using snapshot backups of data communicating with the UNIX or Windows OS. Since thousands of users may be accessing the system, it is imperative that the synbackup utility be used to freeze I/O during backup operations to prevent corrupt ISAM files.

For another layer of redundancy, you may want to add a remote data center backup. The hot standby machine covers the case where the main server crashes. A remote data center comes into play when the main data center becomes unusable, such as after a natural disaster.

Configuring virtual machines

Virtual machines are inherently slower than equivalent physical systems because the virtualization software uses system resources and because cores on a virtual machine do not necessarily map directly to cores on the physical machine due to oversubscription. Consequently, on a virtualized system your Synergy application will not get as much CPU power as it would on a physical system.

When virtualizing systems, CPU usage has only a small overhead. I/O operations—including Toolkit, low-level windows, and most especially WPF-type graphics—slow down significantly compared to a physical system. If the latest virtual I/O hardware instructions are either not present or not enabled, the system may get even slower as more virtual CPU cores are added.

The following Xeon processors (introduced in 2010) and newer ones have the VT-x, VT-d, and EPT features required for acceptable I/O performance: Beckton (multiprocessor), Clarkdale (uniprocessor), and Gainestown (dual-processor). In addition, using multiple dedicated server-class graphics cards associated with a virtual machine may help .NET WPF application performance.

On a multi-CPU socket machine, a virtual machine should be paired with a specific CPU socket to avoid negatively impacting performance.

Note the following:

- If you use non-SSD drives for a virtualized system, do not use shared virtual machine partitions on a physical drive.

- When using a virtual machine with xfServer, remember that the physical machine may share its network ports with other virtual machines because there may not be a network port dedicated to the virtual machine instance. It is preferable to dedicate a NIC port to each VM that uses xfServer or SQL Server.

- For most virtualized operating systems, it is preferable to turn off network packet offloading options (such as checksum offload) on the guest OS network adapter because they add extra overhead and delays that negatively affect xfServer throughput.

Design and configuration for client/server systems

On a client/server system, Synergy data is located on a remote server and, optimally, some data processing takes place on that server as well. When you move a Synergy application from a stand-alone configuration to a client/server configuration, it is likely to need significant architectural changes to approach the performance it had when stand-alone. For example, data updates and random read statements can be 100 times slower in a client/server environment than in a stand-alone environment, especially with a WAN (see Food for thought: Results from our testing).

We recommend the following:

- Use 64-bit architecture, including 64-bit terminal server clients, when possible. Use the latest version of Synergy/DE for applications on clients, and upgrade to the latest versions of server software (exchange servers, domain controllers, SQL Server, etc.).

- Ideally, clients and servers should be connected with at least Gigabit Ethernet (GbE).

- Do not use mapped drives on Windows (i.e., Microsoft SMB network shares) for data file access. They can cause performance problems and file corruption issues, particularly with multi-user access. Use xfServer instead. Generally speaking, when xfServer is properly configured, it significantly outperforms mapped drives in multi-user situations. (See Optimizing network data access with xfServer.) Synergex can provide only very limited support for Synergy database access through mapped drives, and use of a mapped drive for ISAM file access is unsupported.

- When files are on a separate server, it’s best to put file processing logic on that server as well by using xfServer with the Select class or by using xfServerPlus method calls. Otherwise, the Synergy application will use up network bandwidth by passing unnecessary data over the network rather than passing only user-selected records. Programs that transfer large amounts of data across a network (e.g., month-end or day-end processing or reports) can quickly overload a network. See Optimizing network data access with xfServer.

- For network printing, connect the user’s machine directly via the network to the printer, rather than employing a print server.

- On OpenVMS, disk I/O is not cached, and xfServer and SQL OpenNet server do not scale well. Be careful when configuring OpenVMS servers for a large number of xfServer or xfServerPlus client processes or xfODBC users. If using xfServerPlus, ensure your client application uses pooling.

Configuring Windows terminal servers

Terminal servers are machines with the Remote Desktop Services (Terminal Services) role enabled. Do not activate this role unless it is absolutely necessary. File servers, for example, should not have this role (use a separate server for data).

We recommend the following:

- Terminal servers should be 64-bit machines.

- Turn off Fair Share options (CPU scheduling, network, and disk options). These options are set by default and affect performance even when there is only one logged-on user or scheduled task. See Performance Problem when Upgrading to Server 2012, available on the Synergex blog site.

- For xfServer, consider using fewer terminal servers with more users on each. You may even be able to eliminate the need for xfServer by consolidating to a single 64-bit terminal server.

Optimizing network data access with xfServer

xfServer provides reliable, high-performance access to remote Synergy data. It manages connections to files and file locks, preventing data corruption and loss, and it can shift the load for data access from the network to the server, easing network bottlenecks. Whenever possible, do not put user-specific files (such as temporary work files, files you may sort, and print files) on the server; it is better to redirect them to a local, user-specific location.

There are two environment variables you can use with xfServer to improve performance, SCSPREFETCH and SCSCOMPR.

- Setting SCSPREFETCH turns on both prefetching, in which sequential records are fetched from the server and stored in a buffer until needed, and buffering, in which records waiting to be written to the server are buffered and then written all at once. Prefetching works only on READS. Buffering works on WRITES and PUTS; an equivalent option for sequential STOREs is the OPTIONS:"/bufstore" qualifier on the OPEN statement. For details, see Prefetching and buffering records.

- Setting SCSCOMPR turns on data compression, which compresses blanks, nulls, zeros, and repeating characters in records sent between xfServer and its clients. Compression can significantly improve performance on low speed or busy networks, especially WANs.
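The benefit of prefetching described above comes from replacing many small network round trips with a few larger ones. The following Python sketch is a conceptual model only, not xfServer's protocol or API: the `RecordServer` and `PrefetchReader` classes, the batch size of 32, and the 100-record file are all hypothetical.

```python
class RecordServer:
    """Stand-in for a remote file; counts simulated network round trips."""

    def __init__(self, records):
        self._records = list(records)
        self.round_trips = 0

    def fetch(self, start, count):
        """Return up to `count` records beginning at index `start`."""
        self.round_trips += 1
        return self._records[start:start + count]


class PrefetchReader:
    """Reads sequentially, pulling `batch` records per round trip."""

    def __init__(self, server, batch):
        self._server = server
        self._batch = batch
        self._buffer = []
        self._next = 0       # index of the next record to request
        self._done = False   # set once the server returns a short batch

    def reads(self):
        """Return the next record, or None at end of file."""
        if not self._buffer and not self._done:
            chunk = self._server.fetch(self._next, self._batch)
            self._next += len(chunk)
            self._done = len(chunk) < self._batch
            self._buffer = chunk
        return self._buffer.pop(0) if self._buffer else None


def count_round_trips(total, batch):
    """Read a `total`-record file to the end and report round trips used."""
    server = RecordServer(range(total))
    reader = PrefetchReader(server, batch)
    while reader.reads() is not None:
        pass
    return server.round_trips


print(count_round_trips(100, 1))    # one record per request
print(count_round_trips(100, 32))   # prefetch 32 records per request
```

In this model, reading 100 records one at a time costs 101 round trips (including the final request that detects end of file), while a batch size of 32 needs only 4. On a high-latency link such as a WAN, each avoided round trip saves a full network delay.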

The Select classes not only make it easier to write code that accesses Synergy data (by creating SQL-like statements), they can also improve performance because reading the ISAM files and selecting the desired records takes place on the server. Then, only the necessary records are sent over the network to the client. If you want to transmit only the necessary fields within records, you can use the Select.SparseRecord() method or the Select.Sparse class. A corresponding method, SparseUpdate(), can be used when writing data. For sparse records to be truly effective in reducing network traffic, SCSCOMPR should be set as well. Use the DBG_SELECT environment variable to determine how well your Select queries are optimized. See System-Supplied Classes for more information about the Select classes.

On a wireless network or WAN, use xfServer connection recovery (SCSKEEPCONNECT) to improve resiliency. This feature enables an xfServer client application to seamlessly reconnect to the server and recover its session context after an unexpected loss of connectivity. (See Using connection recovery (Windows).)

For more information about xfServer in general, see What is xfServer?

On Windows, do not set system environment variables such as TEMP and TMP to point to an xfServer location. Doing so can cause Visual Studio and other Windows applications to crash.

Configuring SAN for optimal data access

For best performance with Synergy ISAM data, we recommend using high-quality 15,000 RPM SAS disks or SSDs configured in RAID 1 mirror sets. (Other RAID configurations are not recommended.) SANs should use fibre channel or drives should be directly attached. Note the following:

- Use SSD drives on a SAN if you use shared disk partitions to clients on a physical LUN (block-level access).

- For SSDs, make sure the SCSI unmap function is supported and that the controller on the SAN port of the SAN does the appropriate trim-equivalent pass-through.

- NAS connections are not supported, and iSCSI connections are very slow.

- When tuning SAN arrays, we do not recommend using RAID 0 (striping), because Synergy uses 4K buffers for index operations with ISAM, 32K buffers for sequential operations, and 64K buffers when performing a SORT. If you do use RAID 0, consider a stripe size of 4K.

- On Windows, it is best to move data files and work files (TEMP:) to a non-system disk due to the overhead required for Windows filter manager. For example, on a virtual machine, consider using a separate virtual hard disk (VHD) with a separate logical unit number (LUN) for these files.

- For best performance in a virtual environment, physically attach SAN LUNs to the application server, dedicated to a VM instance.

Food for thought: Results from our testing

To illustrate how different configurations affect performance, we did some testing with similar single-user Windows and UNIX systems and an ISAM file with one million 200-byte records, one key, and data compression at 50%. (Note that although the systems were similar, they used different hardware and had different capabilities. The figures cited below are meant to show the differences between operations, not operating systems.) Here’s what we found for local (non-network) access:

| Operation | Records per second (Windows) | Records per second (Linux) |
|---|---|---|
| STORE to an ISAM file | 88,000 | 68,000 |
| READS from an ISAM file opened in input mode | 588,000 | 1,485,000 |
| READS from an ISAM file opened in update mode | 175,000 | 410,000 |
| READS from an ISAM file opened in update mode with /sequential | 330,000 | 657,000 |

The following table shows the results for xfServer and network access with Gigabit Ethernet. The first four rows show local xfServer access; you can compare them with network xfServer access (in the next five rows) to see how a physical network affects performance. Results for mapped drive access are supplied for comparison purposes only; accessing Synergy data via mapped drives is not recommended.

| Operation | Records per second |
|---|---|
| Local xfServer STORE | 37,000 |
| Local xfServer buffered STORE | 69,000 |
| Local xfServer READS without prefetch | 46,000 |
| Local xfServer READS with prefetch | 434,000 |
| xfServer STORE from Linux client to Windows server | 5,000 |
| xfServer buffered STORE from Linux client to Windows server | 37,000 |
| xfServer READS from Linux client to Windows server without prefetch | 6,000 |
| xfServer READS from Linux client to Windows server with prefetch | 105,000 |
| xfServer READS from Linux client to Windows server with prefetch and compression | 141,000 |
| READS from a mapped drive with a single user | 430,000 |
| READS from a mapped drive when a file is open for update by another user | 435 |
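To put the network figures in perspective, it can help to convert records per second into the time needed to read the entire one-million-record test file. The Python sketch below uses only rates from the table above. The dictionary labels and function name are illustrative, and the calculation assumes each rate stays constant for the whole file.

```python
RECORDS = 1_000_000  # size of the test file described above

# Records per second, copied from the network access table.
rates = {
    "xfServer READS, no prefetch": 6_000,
    "xfServer READS, prefetch": 105_000,
    "xfServer READS, prefetch + compression": 141_000,
    "mapped drive READS, file open for update elsewhere": 435,
}


def seconds_to_read(records: int, records_per_second: int) -> float:
    """Time to read `records` at a constant rate."""
    return records / records_per_second


timings = {name: seconds_to_read(RECORDS, rate) for name, rate in rates.items()}

for name, secs in timings.items():
    print(f"{name}: {secs:,.1f} s ({secs / 60:.1f} min)")

# How much faster network READS become once prefetch is enabled.
prefetch_speedup = (
    rates["xfServer READS, prefetch"] / rates["xfServer READS, no prefetch"]
)
print(f"prefetch speedup: {prefetch_speedup:.1f}x")
```

By this arithmetic, reading the whole file over the network takes roughly 167 seconds without prefetch and under 10 seconds with it, while a mapped drive with another user updating the file takes about 38 minutes.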